I'm reading "The Tiger that Isn't" by Michael Blastland & Andrew Dilnot. The chapter on risk (pp. 119-120) describes Gerd Gigerenzer's work on misleading statistics in medical diagnosis, and it genuinely shocked me how badly doctors can misjudge what a diagnostic test result means.

Gigerenzer asked a group of physicians to estimate the chance that a patient truly had a condition (breast cancer) when a test (a mammogram) came back positive. The test was 90 per cent accurate at spotting those who had the condition and 93 per cent accurate at clearing those who did not (that is, a 7 per cent false positive rate). The condition affected 0.8% of women aged 40 to 50. These figures are expressed as conditional probabilities.

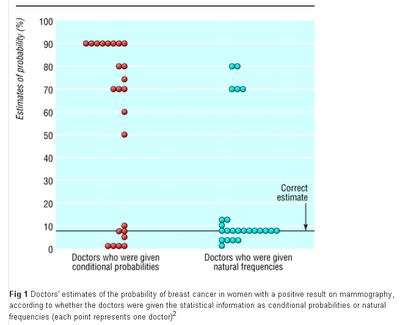

Of the 24 doctors given this information, only 2 correctly worked out the chance that the patient really had the condition.

In fact, under these assumptions more than 9 out of 10 positive tests are false positives, and the patient is most likely in the clear. However, when the same information was expressed as natural frequencies, most doctors got the correct result (Fig 1).

Source: British Medical Journal, 2003

Two ways of representing the same statistical information

Conditional probabilities

The probability that a woman has breast cancer is 0.8%. If she has breast cancer, the probability that a mammogram will show a positive result is 90%. If a woman does not have breast cancer the probability of a positive result is 7%. Take, for example, a woman who has a positive result. What is the probability that she actually has breast cancer?

Natural frequencies

Eight out of every 1000 women have breast cancer. Of these eight women with breast cancer seven will have a positive result (one will have a false negative) on mammography. Of the 992 women who do not have breast cancer some 70 will still have a positive (false positive) mammogram. Take, for example, a sample of women who have positive mammograms. How many of these women actually have breast cancer?
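Both framings in the box carry the same information. As a check on the arithmetic, here is a minimal Python sketch that answers the question each way: Bayes' theorem applied to the percentages, and a plain ratio applied to the counts. The two helper functions are my own hypothetical names, not anything from the book or the BMJ article.

```python
def ppv_from_probabilities(prevalence, sensitivity, false_positive_rate):
    """P(disease | positive test) via Bayes' theorem on conditional probabilities."""
    true_pos = prevalence * sensitivity                # share of all women: sick and flagged
    false_pos = (1 - prevalence) * false_positive_rate  # share of all women: healthy but flagged
    return true_pos / (true_pos + false_pos)


def ppv_from_counts(true_positives, false_positives):
    """Same question with natural frequencies: of all positives, how many are real?"""
    return true_positives / (true_positives + false_positives)


# Mammography figures: 0.8% prevalence, 90% sensitivity, 7% false positive rate.
p = ppv_from_probabilities(0.008, 0.90, 0.07)
# Natural-frequency version: 7 true positives and about 70 false positives per 1000 women.
f = ppv_from_counts(7, 70)

print(f"Bayes on percentages: {p:.1%}")
print(f"Ratio of counts:      {f:.1%}")
```

Both come out at roughly 9%. The small gap between them (about 9.4% versus about 9.1%) exists only because the box rounds the expected counts (7.2 true positives, 69.44 false positives) to whole women.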

Using conditional probabilities, the answer is not obvious. Using natural frequencies, it is much clearer: of the 77 positives (7 true positives + 70 false positives), only 1 in 11 (about 9%) actually has breast cancer. Only one case of breast cancer was missed: the single false negative among 1000 women tested.

Also from BMJ, 2003:

The science fiction writer H G Wells predicted that in modern technological societies statistical thinking will one day be as necessary for efficient citizenship as the ability to read and write. How far have we got, a hundred or so years later? A glance at the literature shows a shocking lack of statistical understanding of the outcomes of modern technologies, from standard screening tests for HIV infection to DNA evidence. For instance, doctors with an average of 14 years of professional experience were asked to imagine using the Haemoccult test to screen for colorectal cancer. The prevalence of cancer was 0.3%, the sensitivity of the test was 50%, and the false positive rate was 3%. The doctors were asked: what is the probability that someone who tests positive actually has colorectal cancer? The correct answer is about 5%. However, the doctors' answers ranged from 1% to 99%, with about half of them estimating the probability as 50% (the sensitivity) or 47% (sensitivity minus false positive rate). If patients knew about this degree of variability and statistical innumeracy they would be justly alarmed.
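The Haemoccult figures can be checked the same way. This sketch converts the stated prevalence, sensitivity and false positive rate into expected counts for an imagined group of 10,000 people, which is the natural-frequency framing the article recommends. `natural_frequencies` is a hypothetical helper of my own, and the population of 10,000 is an arbitrary choice that does not affect the final ratio.

```python
def natural_frequencies(population, prevalence, sensitivity, false_positive_rate):
    """Turn conditional probabilities into expected counts for a concrete population."""
    sick = population * prevalence
    healthy = population - sick
    true_positives = sick * sensitivity              # people with cancer whom the test catches
    false_positives = healthy * false_positive_rate  # healthy people wrongly flagged
    return true_positives, false_positives


# Haemoccult example: 0.3% prevalence, 50% sensitivity, 3% false positive rate.
tp, fp = natural_frequencies(10_000, 0.003, 0.50, 0.03)
ppv = tp / (tp + fp)

print(f"{tp:.0f} true positives, {fp:.0f} false positives")
print(f"P(cancer | positive) = {ppv:.1%}")

# The two most common wrong answers from the doctors, for comparison.
print(f"Sensitivity alone: {0.50:.0%}; sensitivity minus false positive rate: {0.50 - 0.03:.0%}")
```

Out of 10,000 people, about 15 of roughly 314 positives actually have cancer, just under 5%, which matches the "about 5%" quoted above and is nowhere near the 50% or 47% many of the doctors gave.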


Relative risks

Women aged over 50 years are told that undergoing mammography screening reduces their risk of dying from breast cancer by 25%. Women in high risk groups are told that bilateral prophylactic mastectomy reduces their risk of dying from breast cancer by 80%.[8] These numbers are relative risk reductions. The confusion produced by relative risks has received more attention in the medical literature than that of single event or conditional probabilities.[9][10] Nevertheless, few patients realise that the impressive 25% figure means an absolute risk reduction of only one in 1000: of 1000 women who do not undergo mammography, about four will die from breast cancer within 10 years, whereas out of 1000 women who do, three will die.[11] Similarly, the 80% figure for prophylactic mastectomy refers to an absolute risk reduction of four in 100: five in 100 women in the high risk group who do not undergo prophylactic mastectomy will die of breast cancer, compared with one in 100 women who have had a mastectomy. One reason why most women misunderstand relative risks is that they think that the number relates to women like themselves who take part in screening or who are in a high risk group. But relative risks relate to a different class of women: to women who die of breast cancer without having been screened.

Summary points

- The inability to understand statistical information is not a mental deficiency of doctors or patients but is largely due to the poor presentation of the information

- Poorly presented statistical information may cause erroneous communication of risks, with serious consequences

- Single event probabilities, conditional probabilities (such as sensitivity and specificity), and relative risks are confusing because they make it difficult to understand what class of events a probability or percentage refers to

- For each confusing representation there is at least one alternative, such as natural frequency statements, which always specify a reference class and therefore avoid confusion, fostering insight

- Simple representations of risk can help professionals and patients move from innumeracy to insight and make consultations more time efficient

- Instruction in efficient communication of statistical information should be part of medical curriculums and doctors' continuing education
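To see how the same outcome data yield both a headline relative figure and a much smaller absolute one, here is a minimal Python sketch using the death counts quoted in the "Relative risks" passage. `risk_reductions` is a hypothetical helper of my own; `Fraction` is used only to keep the arithmetic exact.

```python
from fractions import Fraction


def risk_reductions(deaths_without, deaths_with, group_size):
    """Return (relative, absolute) risk reduction from deaths per group_size people."""
    risk_without = Fraction(deaths_without, group_size)
    risk_with = Fraction(deaths_with, group_size)
    absolute = risk_without - risk_with  # difference in risk, in people per group_size
    relative = absolute / risk_without   # the same difference as a share of the baseline risk
    return relative, absolute


# Mammography: about 4 vs 3 breast cancer deaths per 1000 women over 10 years.
rrr, arr = risk_reductions(4, 3, 1000)
print(f"Mammography: relative {float(rrr):.0%}, absolute {float(arr):.1%}")

# Prophylactic mastectomy (high-risk group): 5 vs 1 deaths per 100 women.
rrr, arr = risk_reductions(5, 1, 100)
print(f"Mastectomy: relative {float(rrr):.0%}, absolute {float(arr):.1%}")
```

The relative reduction divides by the baseline risk, so a small baseline (4 in 1000) turns a 1-in-1000 absolute change into an impressive-sounding 25%.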
